This is part 1 of 3 of my tutorial mini-series on how to build a DL RL AI for Coder One’s Bomberland competition using TensorFlow.

In this part, we’ll cover the deep learning specific Lingo, Making Replays, and converting them to TensorFlow Observations.

Introduction: Talking like a proper DL RL AI practitioner

DL = deep learning = lots of numbers and GPUs RL = reinforcement learning = try out what works AI = artificial intelligence = fancy buzzword for “computer doing stuff”

This might seem like a weird way to start a tutorial, but in case you need to DuckDuckGo for additional information, it really helps to know the words that people in the field use to describe things. And I’m going to assume that you know how the Bomberland game works in general. If not, see here.

In the game, there are two teams (called agents) and they each control 3 units (people also call them pawns) and these units move around on a map with rules enforced by the server (called the environment) and we can create copies of this environment (called gyms) to let our AIs try out new strategies which we record as replay files (and call it sampling).

Our goal here isn’t to design the AI by hand, but to let it learn by interacting with the environment and hopefully it’ll do some good things randomly which we can then repeat. That’s called reinforcement learning or RL because we reinforce desired behaviour. And since we call the virtual environment a gym, we’ll call the learning process training. But we still need plenty of luck to see useful behaviour in the first place. That’s why we usually don’t let the AI deduce how the world works from scratch, but we do pre-training to increase the chance of something useful happening during the actual training. The most common way to do pre-training is to show the AI how things are done correctly, and we’ll call that behavioural cloning or BC because the goal for the AI is to clone the example behaviour that we show to it.

During the game, we will feed data about the environment into the AI and then the AI will decide what to do. To highlight that the AI cannot directly change the environment state, but only affect it by its actions as determined by the game server, we call the data that goes into the AI an observation. A collection of multiple observations over time is then called a trace or a trajectory or simply a replay.

After the AI does its mathematical magic, it will usually decide what to do, which we call the action. Most of the time, an AI isn’t 100% sure but more like 90% left 10% up in which case we get a likelihood distribution over all possible actions. That fancy word just means the AI will estimate a percentage for each possible action how likely it is that doing that is a good idea. And lastly, a likelihood distribution can be calculated in a more numerically stable way by transforming it into logits. You don’t really need to understand how they work mathematically, but it is helpful to remember that a positive logit means the AI thinks that’s a good idea and a negative logit means the AI thinks that’s a bad idea. So in practice, people usually pick the action with the highest logit because that means we have the maximum likelihood of doing the correct thing.

The calculations by which the AI turns an observation into action logits is what we call a policy. A very simple policy would be to just generate random numbers, meaning the AI takes actions randomly. One could also code a policy by hand, for example to create an opponent for your DL RL AI to train against.

And then, after the AI has decided on an action, we try out what happens and assign a score or reward for the AI. If good things happen, we give a positive reward. If bad things happen, we give a negative reward. We’ll call the current situation that the AI is in the state of the world. If this situation typically leads to good things, then the state will have a positive value. If we’re about to lose the game, that situation will have a very negative state value. Nobody really knows the correct state value, because if you did, you would not need reinforcement learning anymore, because you could just pick the action that leads to the best future state. But we can mathematically approximate the state value as a discounted sum of future rewards, meaning we just add up all the rewards for all the things that we expect to happen and then that’s our estimate for the state value.

High-level Overview: How does DL RL AI training work?

The goal of deep learning (DL) reinforcement learning (RL) artificial intelligence (AI) training is to make the computer do something that looks intelligent by randomly trying out stuff and then reinforcing good behaviour with mathematics. That means our training process basically has 3 steps:

samplethepolicyto get (observation,action,reward) tuples. Or in regular words: We let our current AI play the game and we write down what it sees, what it did, and how that turned out.- estimate

state valuesfrom ourtrajectoriesusing thebellman backup operator. Or in regular words: We look at the replays that we created in the first step and then for each action that the AI did we calculate the sum of rewards for things that happened afterwards. We can pretend that we can look into the future here (bellman operator) because we’re watching a recording of a game that already finished. - update the

policyto adjust theaction logitsto maximize theexpected future rewards. Or in regular words: From step 2 we now have numbers for each game state to describe how well doing that worked out on average, so now we can adjust the chance of taking a given action based on the scores that each action leads to. Obviously, we want to do more of what works well.

Once we have done those 3 steps once, we can then repeat the whole process with our new (and hopefully improved) policy. If you start with random behaviour and repeat this process about 50 times, you’ll end up with an AI that plays well.

For pre-training with behavioural cloning, we can skip step #2. The idea is that we just imitate what someone else is doing, so we’re going to assume that they know what they are doing and then in step #3 instead of comparing the state values for different possible actions, we just always choose what our teacher showed us.

Making Replays

If you’re lazy or you want a quick path to success, you can just download my replay collection from GitHub. But typically, when you start working on a new AI solution, there won’t be any good replay collections available. Thankfully, we can still build a policy anyway. We’ll just fill all parameters with random numbers and the AI will decide on pseudo-random actions in a somewhat deterministic way. Letting the AIs play randomly once won’t help. But letting 2 random AIs duel for 10000 matches will surely lead to us seeing some useful/desirable actions. In the end, this just boils down to stochastics: If there’s a chance of x that the AI does something right, you need to try about -4.6/log(1-x) times to see it happen. So if the chance that the AI randomly places a good bomb is 1 out of 1000, you need to let it play roughly 4600 games to be 99% sure that you’ll see it.

Also, you can drastically speed up the learning process of your AI by pre-training it to imitate a known-good teacher. We’ll be doing that here by using our Bomberland entry lucky-lock-2448 which is the docker image public.docker.cloudgamepad.com/gocoder/oiemxoijsircj-round3sub-s1555. If you have your own docker image from somewhere, feel free to use that instead. For example, after you have trained your first own AI, you can then use it here for subsequent rounds to generate training data off your own AI to make sure it’ll learn new strategies. You could then also generate replays with your AI playing against my lucky-lock-2448, for example, to simulate the competition on your machine.

Import dependencies

1 2 3 4 5 6 7 8 9 | |

Configuration

1 2 3 4 5 6 | |

Helpers

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 | |

Sample the Replays

This will take roughly 90s per game, so 23 minutes for 16.

If you turn SERVER_PORT into an os.env parameter, you can easily start many scripts to sample multiple replays in parallel, for example 1 per CPU core. That said, letting the AIs play to create the replays was also the slowest part for us.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 | |

100%|██████████| 16/16 [24:22<00:00, 91.42s/it]

Creating TensorFlow Observations

Now that we have the replay files, we need to analyze them to extract 3 pieces of information which are needed for AI training:

1. observation We need to convert the JSON game state into some sort of numerical array (one for each unit) which the AI can analyze

2. action The replay files do not explicitly list which action was taken by which unit at which time, so we will need to guess what action each unit took based on what we observe.

3. reward We need to encode how good (or bad) each change during the game was for each of our units. Actually, we can also do the same for “enemy” units, to also learn from their tricks and mistakes. Typically, people score the rewards on a -1 .. 1 scale.

Technically, we will approach this by reading each replay file, replaying all the JSON packets from the server to generate a trajectory as a python array of game states, and then working through the game tick by tick to generate the needed data. To help us with that, we’re going to use the GameState class from the official starter pack.

1

| |

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 5717 100 5717 0 0 16334 0 --:--:-- --:--:-- --:--:-- 16334

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 | |

Let’s test our replay file reader to see what the per-tick JSON state looks like:

1 2 | |

{'game_id': 'c15d43fe-2775-4b95-ba1a-511638724310', 'agents': {'a': {'agent_id': 'a', 'unit_ids': ['c', 'e', 'g']}, 'b': {'agent_id': 'b', 'unit_ids': ['d', 'f', 'h']}}, 'unit_state': {'c': {'coordinates': [2, 0], 'hp': 3, 'inventory': {'bombs': 2}, 'blast_diameter': 3, 'unit_id': 'c', 'agent_id': 'a', 'invulnerability': 0}, 'd': {'coordinates': [12, 0], 'hp': 3, 'inventory': {'bombs': 2}, 'blast_diameter': 3, 'unit_id': 'd', 'agent_id': 'b', 'invulnerability': 0}, 'e': {'coordinates': [12, 12], 'hp': 3, 'inventory': {'bombs': 3}, 'blast_diameter': 3, 'unit_id': 'e', 'agent_id': 'a', 'invulnerability': 0}, 'f': {'coordinates': [2, 12], 'hp': 3, 'inventory': {'bombs': 3}, 'blast_diameter': 3, 'unit_id': 'f', 'agent_id': 'b', 'invulnerability': 0}, 'g': {'coordinates': [6, 9], 'hp': 3, 'inventory': {'bombs': 3}, 'blast_diameter': 3, 'unit_id': 'g', 'agent_id': 'a', 'invulnerability': 0}, 'h': {'coordinates': [7, 9], 'hp': 3, 'inventory': {'bombs': 3}, 'blast_diameter': 3, 'unit_id': 'h', 'agent_id': 'b', 'invulnerability': 0}}, 'entities': [{'created': 0, 'x': 14, 'y': 12, 'type': 'm'}, {'created': 0, 'x': 0, 'y': 12, 'type': 'm'}, {'created': 0, 'x': 4, 'y': 6, 'type': 'm'}, {'created': 0, 'x': 10, 'y': 6, 'type': 'm'}, {'created': 0, 'x': 6, 'y': 4, 'type': 'm'}, {'created': 0, 'x': 8, 'y': 4, 'type': 'm'}, {'created': 0, 'x': 5, 'y': 6, 'type': 'm'}, {'created': 0, 'x': 9, 'y': 6, 'type': 'm'}, {'created': 0, 'x': 13, 'y': 10, 'type': 'm'}, {'created': 0, 'x': 1, 'y': 10, 'type': 'm'}, {'created': 0, 'x': 8, 'y': 14, 'type': 'm'}, {'created': 0, 'x': 6, 'y': 14, 'type': 'm'}, {'created': 0, 'x': 8, 'y': 12, 'type': 'm'}, {'created': 0, 'x': 6, 'y': 12, 'type': 'm'}, {'created': 0, 'x': 11, 'y': 5, 'type': 'm'}, {'created': 0, 'x': 3, 'y': 5, 'type': 'm'}, {'created': 0, 'x': 1, 'y': 12, 'type': 'm'}, {'created': 0, 'x': 13, 'y': 12, 'type': 'm'}, {'created': 0, 'x': 13, 'y': 4, 'type': 'm'}, {'created': 0, 'x': 1, 'y': 4, 'type': 'm'}, {'created': 0, 'x': 5, 'y': 3, 'type': 'm'}, {'created': 0, 'x': 9, 'y': 3, 'type': 'm'}, {'created': 0, 'x': 6, 'y': 6, 'type': 'm'}, {'created': 0, 'x': 8, 'y': 6, 'type': 'm'}, {'created': 0, 'x': 5, 'y': 0, 'type': 'm'}, {'created': 0, 'x': 9, 'y': 0, 'type': 'm'}, {'created': 0, 'x': 1, 'y': 14, 'type': 'm'}, {'created': 0, 'x': 13, 'y': 14, 'type': 'm'}, {'created': 0, 'x': 8, 'y': 8, 'type': 'm'}, {'created': 0, 'x': 6, 'y': 8, 'type': 'm'}, {'created': 0, 'x': 4, 'y': 13, 'type': 'm'}, {'created': 0, 'x': 10, 'y': 13, 'type': 'm'}, {'created': 0, 'x': 0, 'y': 2, 'type': 'm'}, {'created': 0, 'x': 14, 'y': 2, 'type': 'm'}, {'created': 0, 'x': 3, 'y': 7, 'type': 'm'}, {'created': 0, 'x': 11, 'y': 7, 'type': 'm'}, {'created': 0, 'x': 0, 'y': 4, 'type': 'm'}, {'created': 0, 'x': 14, 'y': 4, 'type': 'm'}, {'created': 0, 'x': 8, 'y': 13, 'type': 'm'}, {'created': 0, 'x': 6, 'y': 13, 'type': 'm'}, {'created': 0, 'x': 12, 'y': 1, 'type': 'm'}, {'created': 0, 'x': 2, 'y': 1, 'type': 'm'}, {'created': 0, 'x': 12, 'y': 4, 'type': 'm'}, {'created': 0, 'x': 2, 'y': 4, 'type': 'm'}, {'created': 0, 'x': 6, 'y': 7, 'type': 'm'}, {'created': 0, 'x': 8, 'y': 7, 'type': 'm'}, {'created': 0, 'x': 0, 'y': 3, 'type': 'm'}, {'created': 0, 'x': 14, 'y': 3, 'type': 'm'}, {'created': 0, 'x': 4, 'y': 7, 'type': 'm'}, {'created': 0, 'x': 10, 'y': 7, 'type': 'm'}, {'created': 0, 'x': 1, 'y': 8, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 13, 'y': 8, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 3, 'y': 3, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 11, 'y': 3, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 14, 'y': 8, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 0, 'y': 8, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 11, 'y': 0, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 3, 'y': 0, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 12, 'y': 8, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 2, 'y': 8, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 14, 'y': 14, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 0, 'y': 14, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 1, 'y': 5, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 13, 'y': 5, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 6, 'y': 10, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 8, 'y': 10, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 10, 'y': 14, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 4, 'y': 14, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 5, 'y': 13, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 9, 'y': 13, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 14, 'y': 7, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 0, 'y': 7, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 9, 'y': 8, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 5, 'y': 8, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 0, 'y': 6, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 14, 'y': 6, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 2, 'y': 9, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 12, 'y': 9, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 2, 'y': 7, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 12, 'y': 7, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 4, 'y': 4, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 10, 'y': 4, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 4, 'y': 11, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 10, 'y': 11, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 8, 'y': 3, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 6, 'y': 3, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 6, 'y': 1, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 8, 'y': 1, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 10, 'y': 12, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 4, 'y': 12, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 9, 'y': 7, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 5, 'y': 7, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 0, 'y': 11, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 14, 'y': 11, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 13, 'y': 2, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 1, 'y': 2, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 2, 'y': 2, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 12, 'y': 2, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 11, 'y': 14, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 3, 'y': 14, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 3, 'y': 6, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 11, 'y': 6, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 4, 'y': 1, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 10, 'y': 1, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 5, 'y': 4, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 9, 'y': 4, 'type': 'w', 'hp': 1}, {'created': 0, 'x': 3, 'y': 11, 'type': 'o', 'hp': 3}, {'created': 0, 'x': 11, 'y': 11, 'type': 'o', 'hp': 3}, {'created': 0, 'x': 12, 'y': 5, 'type': 'o', 'hp': 3}, {'created': 0, 'x': 2, 'y': 5, 'type': 'o', 'hp': 3}, {'created': 0, 'x': 8, 'y': 0, 'type': 'o', 'hp': 3}, {'created': 0, 'x': 6, 'y': 0, 'type': 'o', 'hp': 3}, {'created': 0, 'x': 13, 'y': 9, 'type': 'o', 'hp': 3}, {'created': 0, 'x': 1, 'y': 9, 'type': 'o', 'hp': 3}, {'created': 0, 'x': 1, 'y': 1, 'type': 'o', 'hp': 3}, {'created': 0, 'x': 13, 'y': 1, 'type': 'o', 'hp': 3}, {'created': 0, 'x': 9, 'y': 11, 'type': 'o', 'hp': 3}, {'created': 0, 'x': 5, 'y': 11, 'type': 'o', 'hp': 3}, {'created': 0, 'x': 10, 'y': 5, 'type': 'o', 'hp': 3}, {'created': 0, 'x': 4, 'y': 5, 'type': 'o', 'hp': 3}, {'created': 3, 'x': 2, 'y': 0, 'type': 'b', 'unit_id': 'c', 'agent_id': 'a', 'expires': 43, 'hp': 1, 'blast_diameter': 3}, {'created': 3, 'x': 12, 'y': 0, 'type': 'b', 'unit_id': 'd', 'agent_id': 'b', 'expires': 43, 'hp': 1, 'blast_diameter': 3}], 'world': {'width': 15, 'height': 15}, 'tick': 3, 'config': {'tick_rate_hz': 10, 'game_duration_ticks': 300, 'fire_spawn_interval_ticks': 2}}

Guessing the Action

For training our AI, we need to know which action was taken at each time step. This is not recorded in the replay files, but we can guess it based on the changes in the GameState JSON:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 | |

1 2 3 4 | |

at tick 0 unit c did action right

Guessing the Reward

For the AI to know what’s good and what’s bad, we need to encode the quality of any action as a number, which we then call that action’s reward. Depending on the AI to be trained, these reward functions can be super complicated. But most of the time, if you use a highly complex reward function, the AI will learn to exploit mistakes in your assumptions, rather than actually becoming competent. As such, my advice would be to go with a simple reward function. We simply counted how many HP points each team lost and then used the difference as the reward, meaning that for +100% reward the AI needs to successfully attack all enemies while its team takes no damage at all.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 | |

NOTE: Typically one would calculate the reward for each tick, but here I’m going to calculate it over the entire game for illustration purposes so that we hopefully see some number != 0

1 2 3 | |

the long-term reward for unit c is 2.0 . game_over? False

Modeling the AI Observation

This is the critical part for obtaining a good AI. The game server gives us a JSON blob which we need to organize into numerical arrays that our AI can work on. Deep learning AIs usually treat the input surface as smooth, which means that they implicitly assume that a similar observation requires a similar action. That is, in general, reasonable. But it means we need to make sure that small unnecessary changes in the game environment do not trigger large numerical changes in the observations. Also, this means that we need to make sure that every drastic change in gameplay situation for the AI is represented as a large numerical change in the observation.

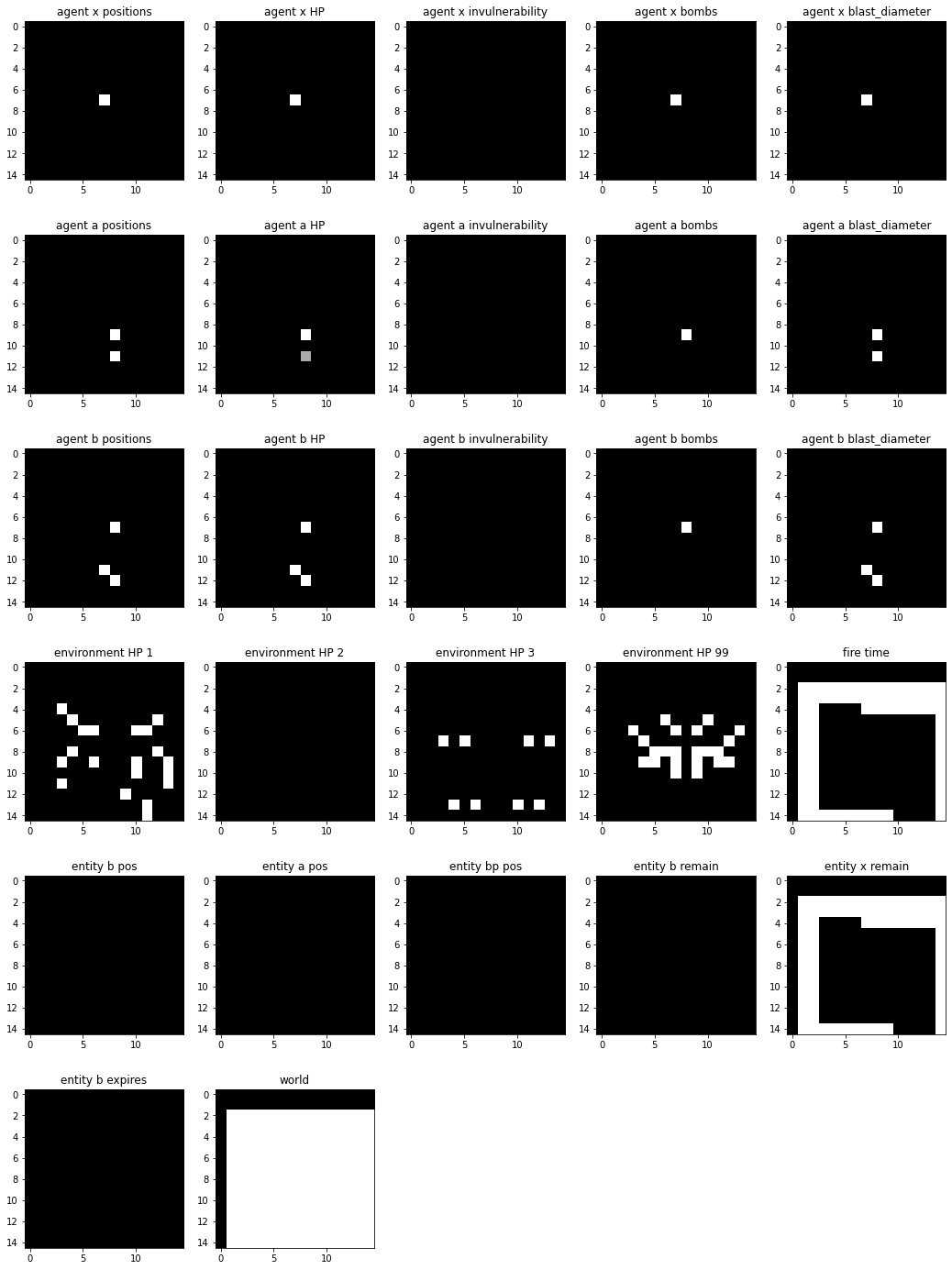

During the competition, we had plenty of discussions about this, but basically, we agreed that: – the observation needs to be centered on the unit, because having the same wall shape at a different position on the map still usually requires the same actions – the identity of enemy units doesn’t matter, meaning we can merge all of them into one map – positions, HP, invulnerability, bombs, bomb diameter, etc. are encoded as spatial one-hot maps, so that when an enemy with bombs and invulnerability moves closer, we will have multiple feature maps have large numerical changes, because this is a significant event

If you want to train your own AI that after some training reliably wins against lucky-lock-2448, this is the place to modify and tweak.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 | |

Testing 123 …

Let’s try out our AI observation by visualizing a tick in the lategame. You can see the unit positions, who still as bombs, and where the fire is.

1 2 3 4 5 6 7 8 9 | |

Writing the dataset for TensorFlow

As the last step in this tutorial, we are now going to bring everything together by using calculate_observation_based_on_gamestate, guess_action_based_on_gamestate_change, and guess_reward_based_on_gamestate_change to convert the trajectory array we created with load_replay_file_as_trajectory into a Numpy .NPY file which can be efficiently read and used for training a DL AI model using TensorFlow (or any other Python-based AI toolkit).

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 | |

1 2 3 4 5 6 7 8 | |

100%|██████████| 16/16 [01:27<00:00, 5.44s/it]

See you in the next tutorial

And that’s it for now. I’ll use this converter on my replay collection from https://github.com/fxtentacle/gocoder-bomberland-dataset . You can either do that, too, by copying files into the ./replays folder or you can generate more fresh replays and convert those. In the next tutorial I’ll show you how to design a basic DL RL AI decision model and train it on the replays with BC = behavioral cloning.